How to Install DeepSeek Locally on a Raspberry Pi

You were worried about ChatGPT taking your job, but OpenAI just got its job replaced by AI instead. Enter DeepSeek, the latest open-source large language model (LLM) that’s shaking up the AI world. If you haven’t heard of it yet, you will soon—because it’s making waves as the biggest competitor to OpenAI.

Unlike OpenAI’s flagship models, DeepSeek’s model weights are openly released (DeepSeek-R1 ships under the MIT license). That challenges OpenAI’s entire business model. Why pay for ChatGPT when you can get comparable results on many tasks for free?

What is DeepSeek?

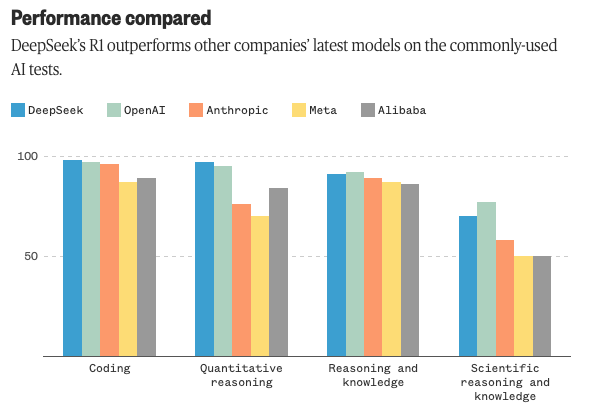

DeepSeek is a new LLM that claims to be faster and more resource-efficient than OpenAI and Anthropic’s offerings. Despite its competitive performance, it reportedly doesn’t rely on the latest, most expensive NVIDIA chips. Even more impressively, it reportedly began as a side project! I wish my side projects were this ambitious.

How Did DeepSeek Achieve This?

DeepSeek’s success comes down to better engineering rather than brute force. It optimizes two key phases:

- Pre-Training: This phase teaches the model a broad understanding of language by training it on vast amounts of text data.

- Post-Training: This fine-tuning phase aligns the model and improves its safety and accuracy through targeted training, rather than by throwing more raw computing power at it.

The result? A high-performing AI model that doesn’t require top-tier hardware.

Try DeepSeek for Yourself

You can test DeepSeek right now at deepseek.com. However, keep in mind that anything you type there is processed on servers in China. If privacy is a concern, you should opt out of data collection (if the option is available). But here’s the real advantage of open-source: you can host DeepSeek locally—something you can’t do with ChatGPT.

Different DeepSeek Flavors

DeepSeek comes in multiple versions, each with different parameter sizes. Here’s a quick breakdown:

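- **DeepSeek-R1 (671B parameters)** – The full model and the most powerful version, but it requires **serious hardware**.

- **DeepSeek-R1-Distill-Llama-70B (70B parameters)** – A distilled version built on a Llama base model, making it more efficient than the full model.

- **DeepSeek-R1-Distill-Qwen-1.5B (1.5 billion parameters)** – A lightweight distilled version built on a Qwen base model, suitable for local use.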

For this tutorial, we’ll be using the 1.5B Distill version, which runs efficiently on limited hardware.

Running DeepSeek 1.5B on a Raspberry Pi 5

In this guide, we’ll install DeepSeek 1.5B on a Raspberry Pi 5 using Ollama. While a Pi 5 is sufficient, you’ll get better performance on a laptop or desktop.

Installing Ollama

First, ensure your system is up to date:

sudo apt update && sudo apt upgrade -y

Next, install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

After installation, you may see a warning about no GPU detected—this just means it will run on your CPU instead.
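Before pulling a model, it can be worth confirming that the install actually worked. The snippet below is a minimal sketch: `have_cmd` is a helper name made up for this post, and the `systemctl` check assumes the install script registered a systemd service called `ollama`, which may not hold on every setup.

```shell
#!/bin/sh
# have_cmd is a hypothetical helper for this post, not part of Ollama.
# It prints "found" if the command is on PATH, otherwise "missing".
have_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found"
    else
        echo "missing"
    fi
}

echo "ollama binary: $(have_cmd ollama)"

# Only query Ollama when the binary is actually present.
if [ "$(have_cmd ollama)" = "found" ]; then
    ollama --version
    # Assumption: the installer set up a systemd service named "ollama".
    if [ "$(have_cmd systemctl)" = "found" ]; then
        echo "service state: $(systemctl is-active ollama 2>/dev/null)"
    fi
fi
```

If the binary shows up as missing, re-run the install command and check its output for errors.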

Downloading the 1.5B Model

DeepSeek models are available in the Ollama library. To download and run the 1.5B version, use:

ollama run deepseek-r1:1.5b

That’s it! Once the download finishes, Ollama drops you into an interactive prompt (type /bye to exit), and you now have DeepSeek running locally on your machine.
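Besides the interactive prompt, Ollama also serves a local HTTP API, by default on port 11434, so you can send prompts from scripts. Here is a rough sketch using `curl`; `build_payload` is a helper name invented for this post, and the prompt is only an example.

```shell
#!/bin/sh
# build_payload is a hypothetical helper, not part of Ollama.
# It builds the JSON body for Ollama's /api/generate endpoint.
# The prompt is inserted verbatim, so it must not contain
# double quotes or backslashes.
build_payload() {
    printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

OLLAMA_URL="http://localhost:11434"   # Ollama's default listen address
MODEL="deepseek-r1:1.5b"

# Only talk to the server when both tools are installed.
if command -v ollama >/dev/null 2>&1 && command -v curl >/dev/null 2>&1; then
    curl -s "$OLLAMA_URL/api/generate" \
        -d "$(build_payload "$MODEL" "Why is the sky blue?")" \
        || echo "Could not reach Ollama at $OLLAMA_URL"
fi
```

With `"stream": false` the server answers with a single JSON object instead of a stream of partial responses, which is easier to handle in a script.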

Some stats: running a single query squeezes every ounce of CPU resources out of the Pi 5.
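If you want a number to go with that, generation speed in tokens per second is a handy one. Ollama's `/api/generate` response reports `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), and `ollama run --verbose` prints similar timings. The helper below is a sketch of the arithmetic only; `tokens_per_sec` is a name made up for this post, and the sample numbers are illustrative, not measurements from my Pi.

```shell
#!/bin/sh
# tokens_per_sec EVAL_COUNT EVAL_DURATION_NS
# Hypothetical helper: converts Ollama's eval_count and eval_duration
# (nanoseconds) into tokens per second, printed with two decimals.
tokens_per_sec() {
    awk -v n="$1" -v ns="$2" 'BEGIN {
        if (ns <= 0) { print "n/a"; exit }   # avoid dividing by zero
        printf "%.2f\n", n / (ns / 1e9)
    }'
}

# Illustrative input only: 100 tokens generated in 20 seconds.
tokens_per_sec 100 20000000000   # prints 5.00
```

To feed it real values, you could pull both fields out of the API response with a JSON tool such as `jq` (an assumption, since it is not installed by default) and pass them in as the two arguments.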

Next Steps: Checking for Rogue Connections

One major advantage of running an AI model locally is data privacy. But how can we be sure it’s not making unexpected network connections? I plan to analyze its behavior using Wireshark to verify that it operates fully offline.
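Until the Wireshark analysis is done, a quick first look is possible with `ss`, which lists open sockets. This is not a substitute for a proper packet capture, just a rough sketch: `non_loopback_peers` is a helper name invented here, it assumes the usual `ss -tnp` column layout (state first, peer address fifth), and the sample lines are made up for illustration.

```shell
#!/bin/sh
# non_loopback_peers is a hypothetical helper for this post.
# It reads `ss -tnp`-style lines on stdin and prints established
# connections whose peer address is not a loopback address.
non_loopback_peers() {
    awk '$1 == "ESTAB" && $5 !~ /^(127\.|\[::1\]|\[::ffff:127\.)/ { print }'
}

# Made-up sample input: one local connection, one to an outside host.
non_loopback_peers <<'EOF'
ESTAB 0 0 127.0.0.1:11434 127.0.0.1:50000 users:(("ollama",pid=1,fd=3))
ESTAB 0 0 192.168.1.10:40000 203.0.113.5:443 users:(("ollama",pid=1,fd=4))
EOF
# Only the 203.0.113.5 line is printed.
```

On the Pi itself you would pipe in live data instead, for example `sudo ss -tnp | grep ollama | non_loopback_peers`, while a query is running. Keep in mind that downloading the model needs the network, so the check only means something once the model is already on disk.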

Stay tuned for those results! Meanwhile, enjoy experimenting with DeepSeek.